[Python] ChatGPT JumpCloud Assistant (unofficial / beta) with a FastAPI bridge on AWS Lightsail

11-20-2023 04:44 AM

Hi Community Folks

Yes, I still owe you an update on how to host the "Software Self Service Installation Portal [SSSIP]", but I ran into an issue with OIDC there and don't have a solution yet. After some frustration with it, I started working on another project over the last few evenings and the weekend:

I familiarised myself with the recently launched OpenAI ChatGPT Assistants. They are basically an evolution of ChatGPT Plugins, but way better: much more flexible and also easier to create.

I repurposed the idea of my previous experimental ChatGPT-Plugin (article here) and brought it a bit further.

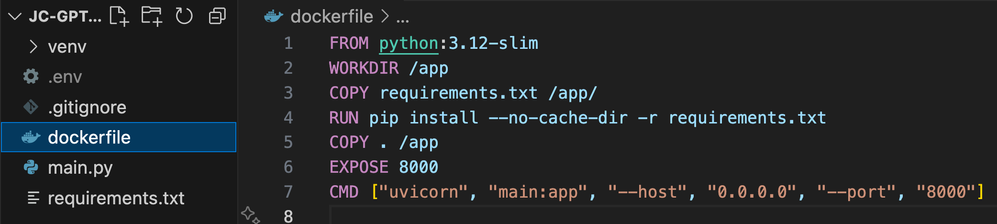

One major objective was to make this more consumable, so I built it from scratch as a Docker image and tested it frequently on AWS Lightsail, which is friendly with Docker images and pretty easy to manage.

What does it do and how does it work?

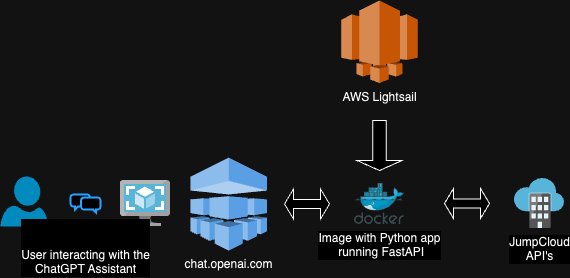

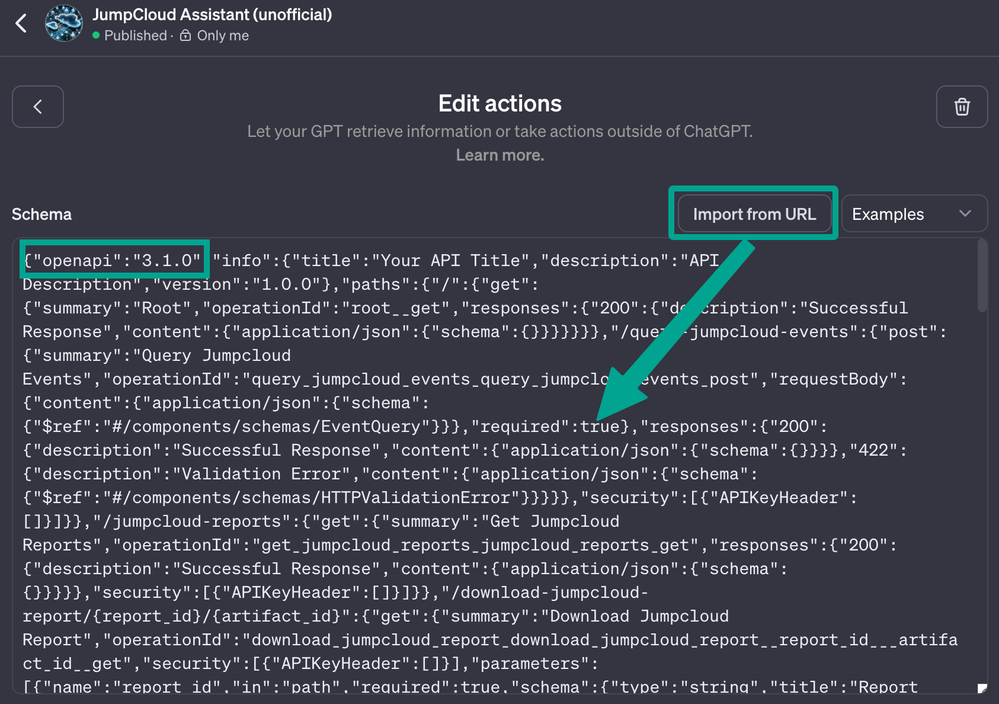

Once you've created a custom ChatGPT Assistant, you can integrate the customised API with the assistant. The OpenAPI specification creates Actions within the Assistant.

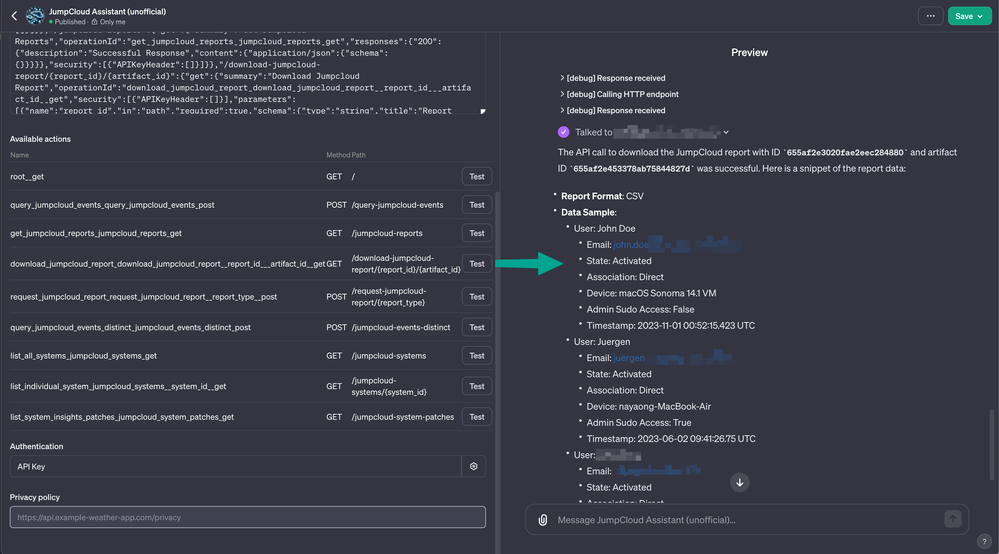

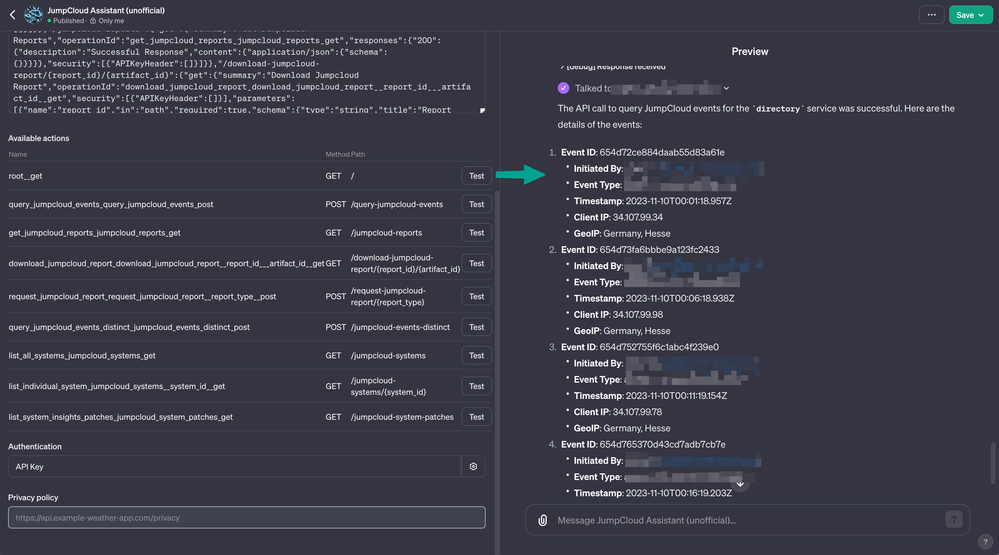

When you run these Actions, an API call is made to the instance running on AWS Lightsail and then literally relayed to the JumpCloud APIs for further action.
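A minimal sketch of that relay step, using only the Python standard library. The function names and the example path are assumptions for illustration and not the actual code of the bridge. The JumpCloud base URL and the `x-api-key` header follow the public JumpCloud API v1 conventions.

```python
import json
import urllib.parse
import urllib.request

# Base URL of the JumpCloud v1 API; the key travels in the `x-api-key` header.
JUMPCLOUD_BASE_URL = "https://console.jumpcloud.com/api"


def build_jumpcloud_request(path, api_key, params=None):
    """Build (but do not send) the upstream request for a relayed call."""
    url = f"{JUMPCLOUD_BASE_URL}/{path.lstrip('/')}"
    if params:
        url += "?" + urllib.parse.urlencode(params)
    return urllib.request.Request(
        url,
        headers={"x-api-key": api_key, "Accept": "application/json"},
        method="GET",
    )


def relay(path, api_key, params=None, timeout=10):
    """Send the request to JumpCloud and return the decoded JSON body."""
    request = build_jumpcloud_request(path, api_key, params)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Splitting "build" from "send" keeps the part that touches the network small, so the URL and headers can be checked without a JumpCloud tenant.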

You may wonder: Why this way?

I took this approach for various reasons:

- Currently, the JumpCloud APIs are defined as OpenAPI 2.0 (Swagger) files, so they are not directly compatible with OpenAI's GPT Assistants, which require OpenAPI 3.x

- I want API calls to be custom and gated. Even if direct integration were possible, I wouldn't want to expose my JumpCloud API key within the Assistant itself. I also want better control over which API calls can be made and how, which in turn gives more control over the responses and how the Assistant can interact with them.

- I might want to use the Python App 'in between' to do other things in future.
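The "custom and gated" idea from the list above could be sketched roughly like this. The allowlist entries and the visible field names are hypothetical and only illustrate the pattern: relay nothing that is not explicitly permitted, and trim responses before the Assistant sees them.

```python
# Hypothetical allowlist: only these (method, path) pairs are ever relayed.
ALLOWED_CALLS = {
    ("GET", "/systemusers"),
    ("GET", "/systems"),
}

# Hypothetical set of fields the Assistant gets to see for a user record.
VISIBLE_USER_FIELDS = ("username", "email", "activated")


def is_allowed(method, path):
    """Return True only for calls that were explicitly allowlisted."""
    return (method.upper(), path) in ALLOWED_CALLS


def trim_user(record):
    """Drop every field that is not meant to reach the Assistant."""
    return {key: record[key] for key in VISIBLE_USER_FIELDS if key in record}
```

With this shape, a destructive call such as a `DELETE` never reaches JumpCloud unless it is added to the allowlist on purpose.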

Back to the 'What does it do?':

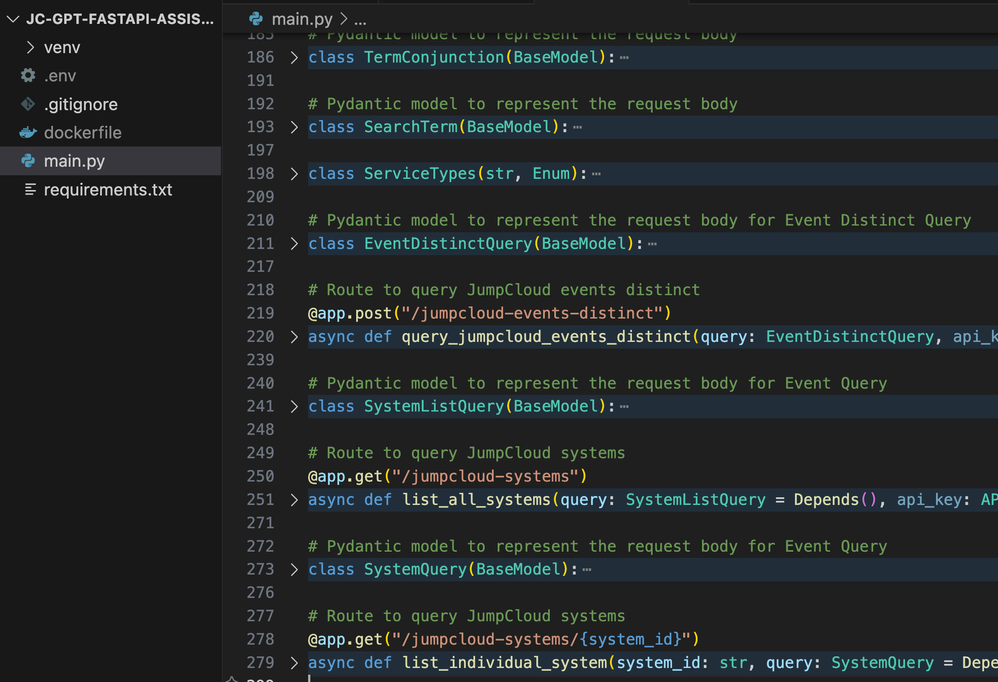

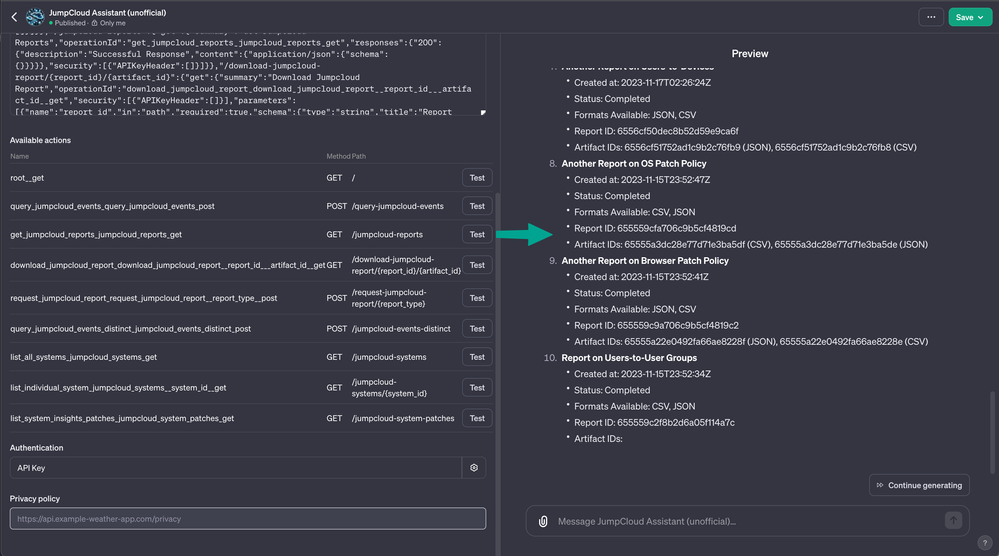

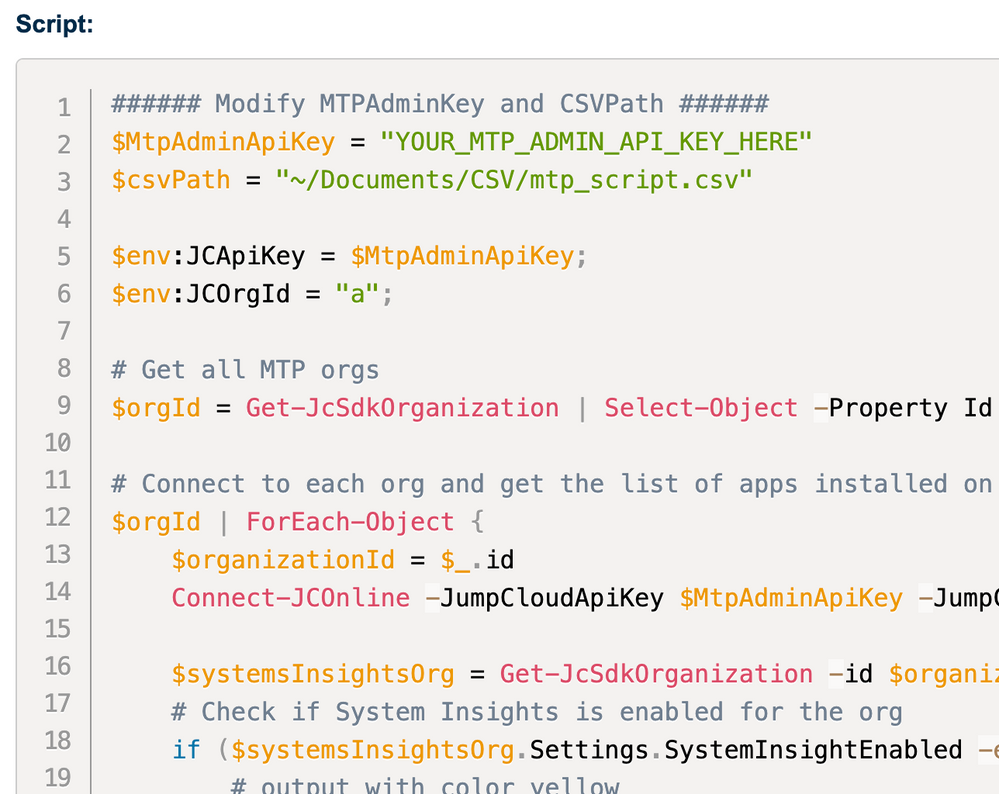

Each available `Action` within the Assistant is a so-called 'route' within the Python App. It mimics the JumpCloud APIs, sometimes with modifications.

FastAPI generates an OpenAPI v3 specification on the fly from the respective code. This custom API is then exposed (secured with a custom API key) and imported via a URL into the GPT Assistant.

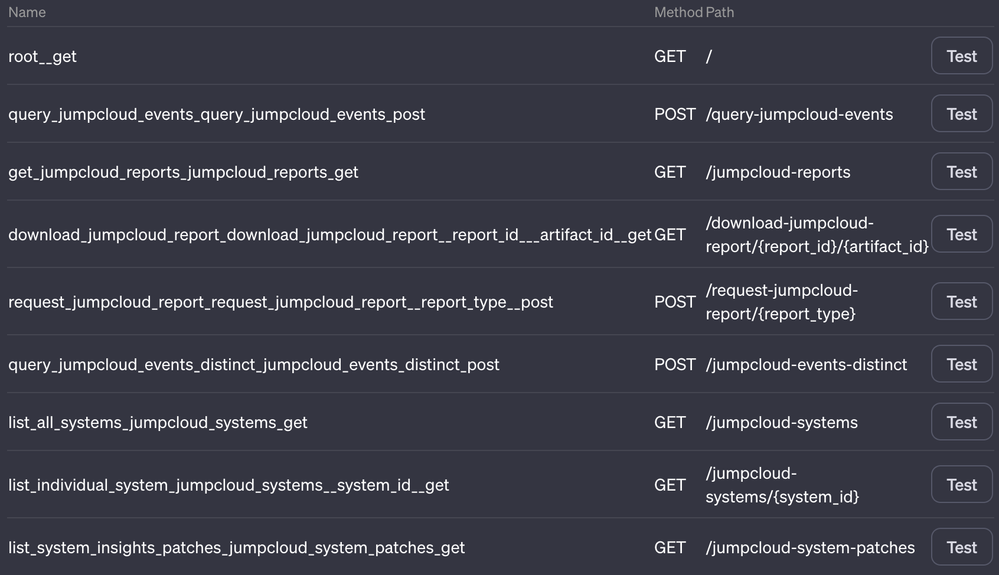

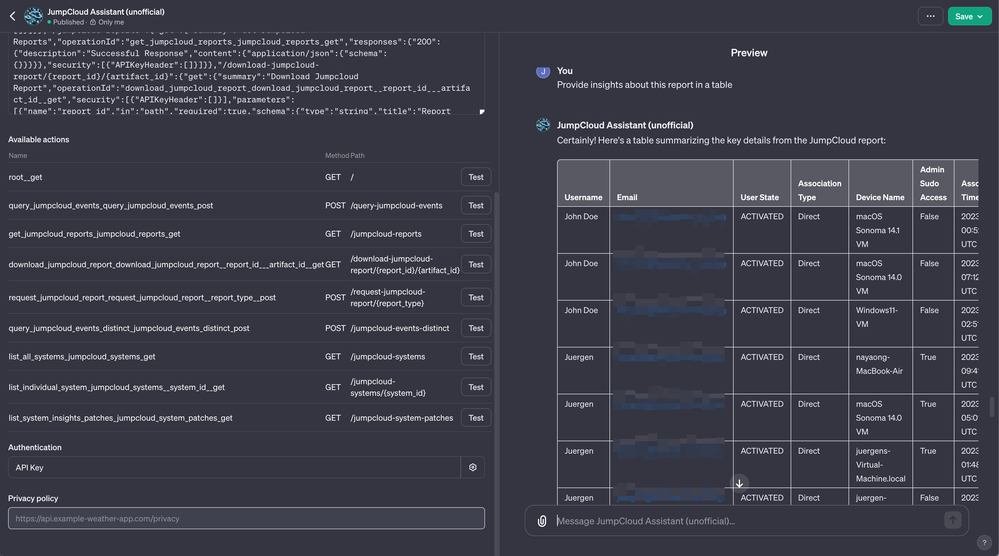

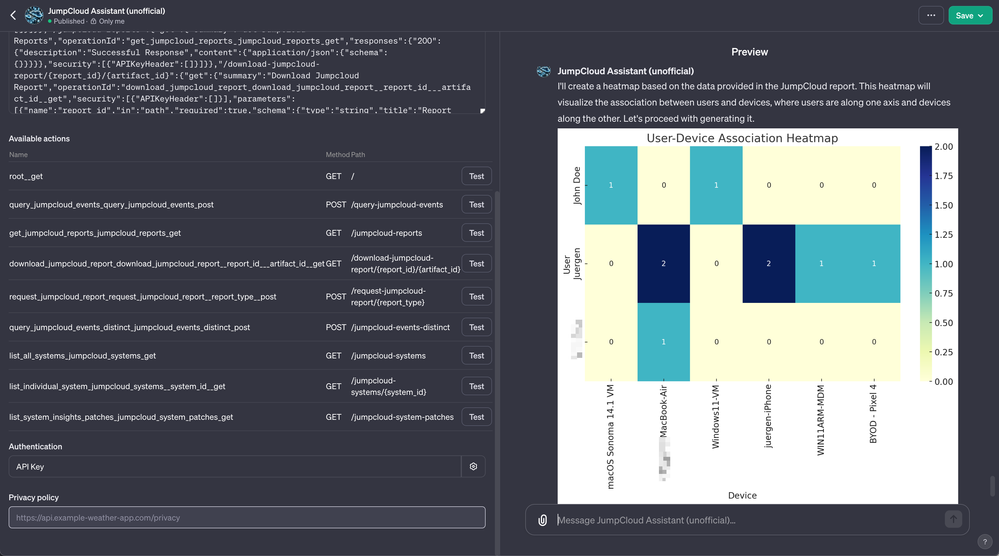

Which Actions does it have right now?

As of this first version, the following Actions have been implemented:

What does the setup look like?

As mentioned earlier, it basically looks like this:

Your ingredients are:

- ChatGPT Plus Subscription (for $20/month)

- Python App to create the FastAPI Endpoint servicing the GPT Assistant via OpenAPI v3 specification

- Hosted within a Docker Image running on AWS Lightsail (Nano Instance for $7/month)

How did I get there?

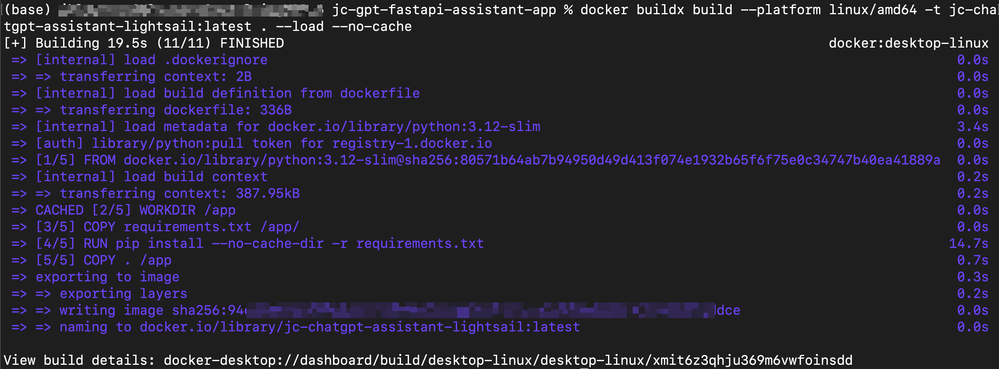

- I started building the functionalities locally with VSCode in a Virtual Environment

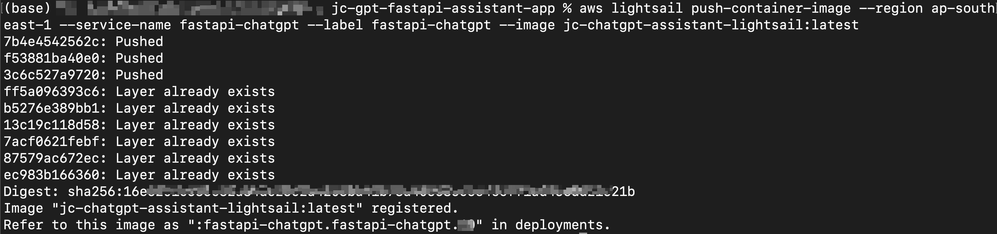

- Then I created the Docker image and brought it over to AWS Lightsail

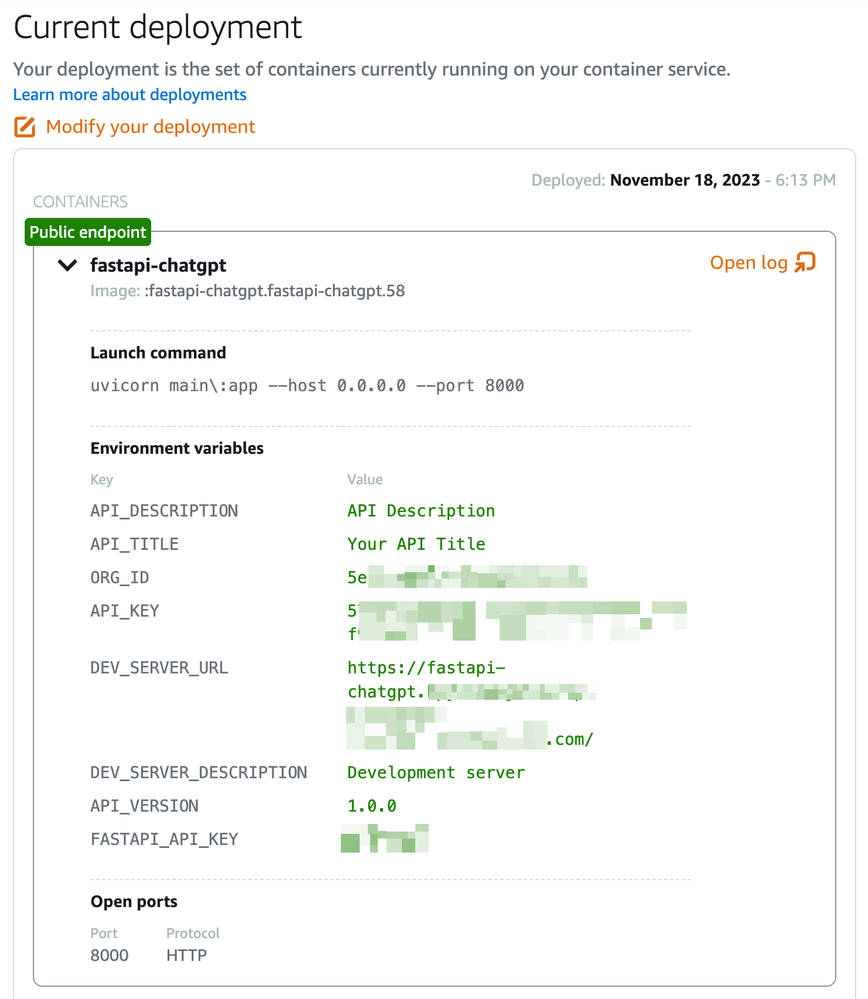

- There you can start the deployment including all required Environment Variables

- The deployment creates a public endpoint which serves the OpenAPI specification
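Since the deployment is driven by environment variables, the app can read them once at start-up and fail fast if one is missing. A small sketch, with hypothetical variable names (`JUMPCLOUD_API_KEY`, `BRIDGE_API_KEY`) that may differ from the ones the image actually expects:

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    jumpcloud_api_key: str  # key used for the upstream JumpCloud calls
    bridge_api_key: str     # custom key the Assistant must present


def load_settings(environ=os.environ):
    """Read the required settings and fail fast when one is missing."""
    required = ("JUMPCLOUD_API_KEY", "BRIDGE_API_KEY")  # hypothetical names
    missing = [name for name in required if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return Settings(
        jumpcloud_api_key=environ["JUMPCLOUD_API_KEY"],
        bridge_api_key=environ["BRIDGE_API_KEY"],
    )
```

Keeping both keys out of the image and in the deployment configuration means the same image can be reused for another tenant by changing only the variables.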

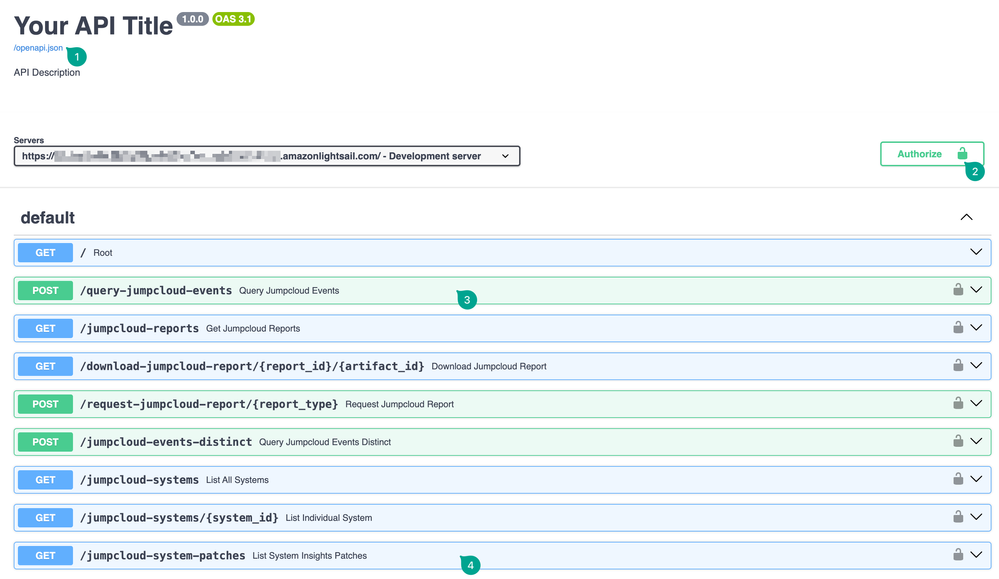

(1) This URL pointing to the `OpenAPI.json` will be imported (see below)

(2) Requires the Custom API Key (also used when integrating with the Assistant)

(3) to (4) The available routes serving the Actions in the Assistant

The `OpenAPI.json` file is then imported into the GPT Assistant to establish the Actions

Last question to answer: Does it work?

Yes, it does, but... it also requires some fine-tuning and interaction with the Assistant itself during configuration once it has been created. That's why I have deliberately left it as a beta so far.

Have a look at some screenshots from the interactions with the assistant:

More to come... if you have any questions, don't hesitate to drop them here or in a DM.

Thanks for reading!

-Juergen